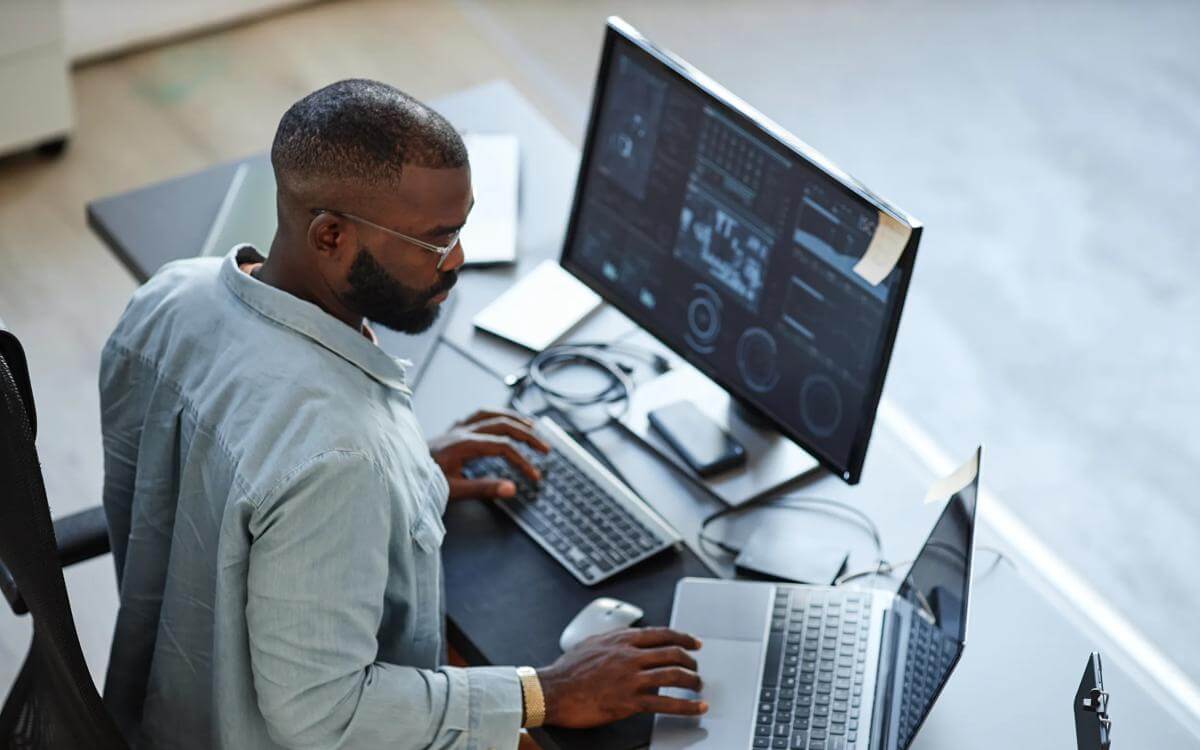

The adoption and use of AI is now ubiquitous. Businesses are procuring AI systems directly, both commercial off-the-shelf and bespoke solutions. Their aims include creating efficiencies and cost savings, mitigating risk, enabling more insightful strategic decision-making and gaining a competitive commercial advantage through improved or redesigned workflows and/or enhanced data analytics.

The same is expected where business processes or functions are outsourced to a third-party provider. It will be rare for the services not to be underpinned, at least in part, by some form of AI system.

As such, when negotiating and agreeing outsourcing transactions, it is particularly important to consider the role of AI systems. An AI system may be used on a headline basis (i.e. where the customer has direct access to and use of the AI system) or as a component of a wider service offering (i.e. used in the background by a supplier to drive service delivery efficiencies).

Key legal considerations for AI system outsourcing

Many of the provisions typically found in a comprehensive English law governed outsourcing agreement will apply equally, whether AI or another technology is being procured or deployed in service delivery. However, the way in which AI systems are developed and operate creates unique risks, and these require a clear understanding and careful consideration of the particular AI system in question.

For example, from a customer’s perspective, the key legal questions to ask at the outset, understand and (where applicable) reflect in the outsourcing contract include those relating to:

1. Provider

- Who is the 'provider' of the AI system? Is it the supplier contracting entity itself, or a third party from whom the supplier has procured the AI system and which the supplier merely 'deploys'? If it is a third party, this will likely limit the terms to which the supplier contracting entity will commit.

2. Training

- On what data set has the AI system been trained and tested (i.e. is that data accurate, reliable and unbiased, and is it representative of the data that will be processed within the outsourced solution)? Is top-up or periodic training required and, if so, on which data set and who is responsible for it?

- Have the necessary rights or permissions to use the training data been obtained from the copyright owners or licensors?

- Is any of the data the customer is to provide for training purposes confidential or commercially sensitive?

- Will any training data the customer provides be shared with the supplier’s other customers or users?

- Will any customer data (including any collected on an aggregated basis) be used by the supplier, or provided to the underlying system provider, for training the AI system?

3. Intellectual property

- On what basis are the user input, the AI model (and any improvements to it) and the output made available to, and/or owned by, the customer?

- What contractual comfort (e.g. an indemnity) will be given should the output and/or the customer’s use of the AI system infringe a third party’s IP rights?

- Will the supplier need to integrate the AI system with, and/or reverse engineer, any of the customer’s proprietary or licensed applications (e.g. to provide outsourced support and maintenance services)?

- Has the customer obtained all the necessary licences/permissions, or put in place contractual protections (e.g. restrictions on the supplier’s access/use and references to specific applications only), to accommodate the outsourced services?

4. Accuracy

- How accurate and reliable is the output?

- Does the AI system detect and avoid bias and/or hallucinations?

- Is the AI system independently verified?

- To what extent, and on what basis, will the supplier accept responsibility for inaccurate results?

5. Explainability, transparency and governance

- Is the supplier or provider able to explain (not only at the outset but throughout the term of the contract) how the AI system operates and makes inferences/decisions?

- What level and frequency of human oversight is there?

- Is there a sufficient change process to accommodate material changes in the AI system throughout the life of the contract?

6. Data

- Who is providing the input data: the supplier, the customer, a third-party end user or a combination? In a generative AI system, for example, the supplier or provider may supply input data in addition to the user prompt, in the form of a system prompt and/or through retrieval-augmented generation (RAG).

- If web scraping is being used as part of the RAG process, is this compliant with applicable copyright laws?

- Where is the data (including any customer prompts) stored and for how long?

- Will data be transferred outside the organisation and/or jurisdiction and, if so, on what basis?

- Is any personal data, other than user access details, being processed by the AI system? If so, in the UK the Data Protection Act 2018 and the UK General Data Protection Regulation (UK GDPR) will apply.

7. Cost savings

- What are the anticipated cost savings over the life of the contract? As a customer, are there sufficient contractual mechanisms to ensure that the customer benefits from the rapid development of the AI system? For example, are there benchmarking or continuous improvement obligations on the supplier and, if so, what is the gainshare between the parties?

- In the event of a supplier breach, will the customer be able to recover the loss of those anticipated cost savings?

Large-scale outsourcing projects take a long time to move from the procurement stage through to a finalised contract. Customers and suppliers should therefore have these conversations as early as possible to ensure alignment on the key issues (e.g. the customer should ensure they are covered in any request for proposal documentation).

It is also essential that the AI system complies with all relevant laws and regulations. The UK's current approach is 'pro-innovation', sector-specific and principles-based, and it relies on existing legislation and regulators rather than on AI-specific legislation. However, members of the House of Lords have renewed attempts to create such legislation in the form of the 'Artificial Intelligence (Regulation) Bill'. This Private Members’ Bill is unlikely to survive the scrutiny of the House of Commons. Even so, customers and suppliers should keep one eye on its outcome and on any future government or regulator guidance on particular AI use cases.

In contrast, the European Union (EU) has adopted the EU AI Act (Regulation (EU) 2024/1689), which takes a comprehensive, tiered, risk-based classification approach with cross-sector application. Companies entering into a global outsourcing arrangement should be mindful of its broad, extra-territorial scope. They should also pay attention to closely linked legislation, such as the Digital Operational Resilience Act (Regulation (EU) 2022/2554), which imposes requirements on financial entities to manage information and communication technology (ICT) risks. You can find more detail on this in our recent article 'DORA: Countdown to comply with January 2025 deadline'.

Conclusion

Outsourcing contracts are being reshaped against this evolving regulatory landscape. Businesses are increasingly procuring AI-enabled solutions, even where AI is used only in the background. Commercial models are also being revised to reflect the technology, and pricing by reference to FTE/people costs is likely to become less relevant. Please do contact us if you would like to discuss.

Ben Littlejohns

Senior Associate

ben.littlejohns@brownejacobson.com

+44 (0)330 045 1098