Damned if they do, damned if they don’t: Understanding and mitigating the risk of AI tools in healthcare practice

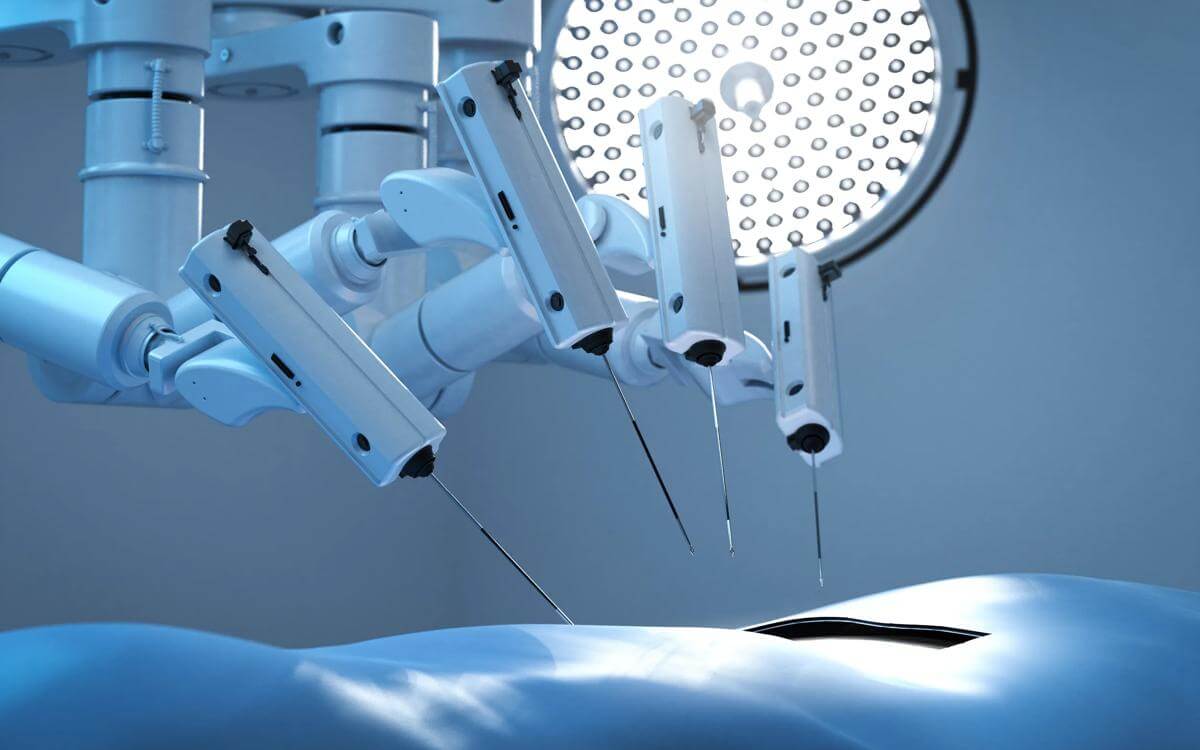

Artificial intelligence (AI) is rapidly changing the landscape of healthcare, with tools now widely used for diagnostics, triage, decision support, managing A&E demand, and improving administrative efficiency. These technologies promise greater consistency, faster results, and fewer human errors. However, they also introduce new risks, particularly around liability when mistakes occur.

A central issue is how AI will affect medical claims and the assessment of standards of care. The standard of care for medical practitioners is set by the Bolam test, which asks whether a responsible body of professionals would have acted in the same way (Bolam v Friern Hospital Management Committee [1957] 1 WLR 582). The Bolitho case refined this by requiring that the professional opinion relied upon must also be logically defensible (Bolitho v City and Hackney Health Authority [1998] AC 232).

If a clinician follows AI advice that turns out to be wrong, the courts will need to consider whether relying on AI was logical and supported by a responsible body of medical opinion. Conversely, a medical practitioner who does not use AI may face criticism if the technology was available and not deployed. An example of the latter is the interpretation of radiology images, where certain AI programmes significantly improve the visibility and detection of abnormalities.

As with any technology, AI is not infallible and continues to evolve. If clinicians fail to take reasonable steps to verify AI-generated advice, they may still be held liable. Courts are likely to examine whether the correct data was input, whether the right tool was chosen, and what the expected output should have been. A decision to avoid AI altogether could also be questioned, especially if using it might have led to a quicker or more accurate diagnosis.

Liability and accountability

This raises one of the biggest questions surrounding AI in healthcare: who is responsible?

- Hospitals may be liable if they fail to test or validate AI systems.

- Clinicians may be liable if they fail to exercise their own judgment, even when using AI tools.

- AI developers may face liability if their software is found to be defective or misleading.

So, what happens if AI does get it wrong?

AI tools are only as good as the data they are given. Errors can arise where a tool has been poorly programmed or where outdated data has been input.

These issues raise questions about product liability, procurement, and contractual indemnities. At present, there is no clear line of responsibility between healthcare providers, AI developers, and regulators when patients are harmed. Comprehensive policies are needed to clarify where liability lies and to ensure patient protection.

In the UK, AI in healthcare is regulated by the Medical Devices Regulations 2002 and GMC guidance, but there is increasing demand for specific legislation to govern AI accountability. The government has proposed an AI regulatory framework focusing on transparency, accountability, and patient safety. Measures under consideration include mandatory human oversight to ensure AI does not replace clinical judgement, audits to test AI systems for bias and accuracy, and clear, transparent explanations of AI decisions. However, experts say current rules are not keeping pace with AI’s rapid growth, leaving patient protection and liability unclear.

Conclusion

Clear and robust policies are essential to assign responsibility, mitigate risk, and protect patients as AI becomes more integrated into healthcare.

Hospitals and healthcare providers must review their processes as AI continues to develop and ensure that appropriate guidance and training are in place for clinicians to use AI tools safely and effectively. For their part, clinicians should tell their indemnifier about any use of AI tools in practice, so that risk exposure is adequately managed and cover is in place.

By addressing these areas, hospitals and clinicians can make the most of AI’s benefits while minimising risks and maintaining high standards of patient care.

At Browne Jacobson, our dedicated specialist teams are equipped to support you with a comprehensive range of services, including policy reviews, claims management, and the resolution of coverage disputes. We understand the complexities involved in these matters and are committed to providing tailored advice and practical solutions to meet your specific needs.

Contents

- Insurance insights: Medical malpractice matters, January 2026

- Healthcare insurers and indemnifiers: Post-2025 litigation learning

- Treatment as team effort: Medical and dental patient responsibility

- Medical tourism on the rise: What UK-based insurers need to know

- Private fertility clinics: Increasing incidents in ‘very safe’ sector

- Management of group action claims in medical negligence

Contact

Naiomh O'Reilly

Associate

naiomh.o'reilly@brownejacobson.com

+44 (0)330 045 1334